How MuninnDB works

Three ideas from cognitive neuroscience, implemented as first-class database primitives. No LLM required — the math runs on every read and write.

The fundamental unit: the Engram

In neuroscience, an engram is a physical memory trace in the brain. In MuninnDB, it's the unit of storage: think of it as a "row" that knows how relevant it is, how confident we are in it, and which other engrams it's related to.

Three ideas from neuroscience.

One database that implements them.

These aren't features bolted on top; they're the storage engine itself.

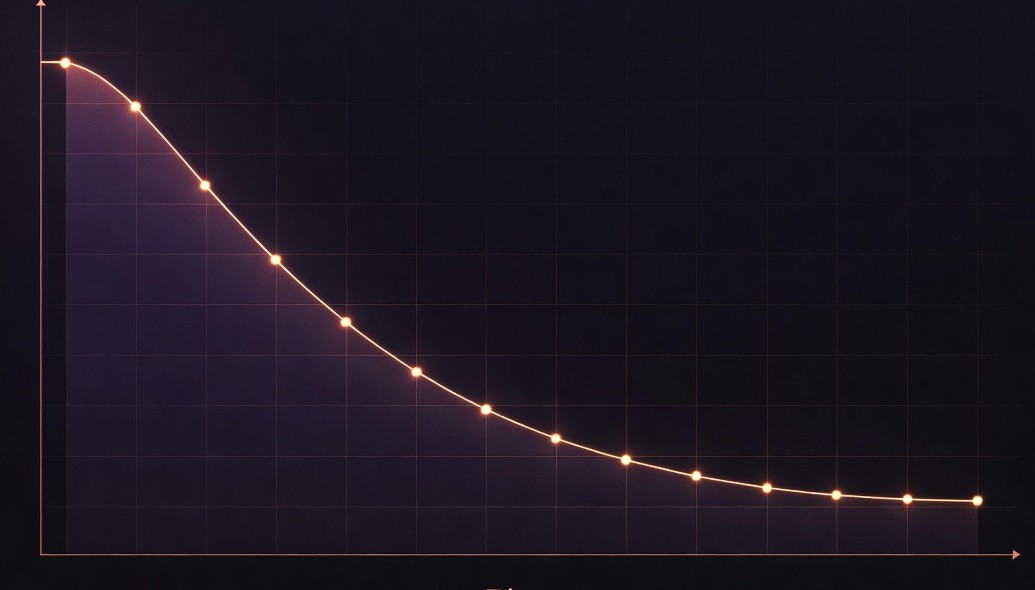

Decay

Memories fade — just like real ones.

Every engram (memory) has a relevance score that naturally fades over time using the Ebbinghaus forgetting curve — the same math that describes how human memory works. Old, unused memories drift toward a background floor. Fresh, frequently-accessed memories stay strong.

Why it matters: Your agent's context stays fresh. Stale information fades naturally — no manual cleanup required.

A continuous decay worker applies the Ebbinghaus formula R(t) = max(floor, e^(−t/S)), where t is the elapsed time and S is the engram's stability, which grows through spaced retrieval. The default decay floor is 0.05, so memories never fully vanish.
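The formula above can be sketched in a few lines of Python. This is a minimal illustration, not MuninnDB's implementation: the function name `retention`, the time unit, and the example stability values are assumptions; only the formula and the 0.05 floor come from the text.

```python
import math

DEFAULT_FLOOR = 0.05  # default decay floor stated in the text


def retention(elapsed: float, stability: float, floor: float = DEFAULT_FLOOR) -> float:
    """R(t) = max(floor, e^(-t/S)); elapsed and stability share one time unit."""
    if stability <= 0:
        raise ValueError("stability must be positive")
    if elapsed < 0:
        raise ValueError("elapsed must be non-negative")
    return max(floor, math.exp(-elapsed / stability))


# Hypothetical example: the same 3 time units without retrieval,
# for a low-stability and a high-stability engram.
for s in (1.0, 10.0):
    print(f"S={s}: R(3) = {retention(3.0, s):.3f}")
```

With S = 1 the raw value e^(−3) ≈ 0.0498 falls just below the floor, so the score is clamped to 0.05. With S = 10 the same gap leaves the score at about 0.741, which is how higher stability keeps frequently retrieved engrams strong.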

Hebbian Learning

Related memories grow stronger together.

When two memories are retrieved together — because both were relevant to your query — their association automatically strengthens. The more often two engrams fire together, the stronger their bond. This is "neurons that fire together, wire together" — Hebbian learning — without any LLM involved.

Why it matters: Your agent learns what concepts belong together without you telling it. Context awareness emerges automatically from usage patterns.

A co-activation log (a ring buffer of 200 entries) feeds the Hebbian worker. Each co-activation updates the association weight as new_weight = old_weight × (1 + boost_factor). Updates are bidirectional: associations that go unused weaken symmetrically.
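A rough sketch of the strengthening half of this loop follows. The class and method names, the boost factor of 0.1, and the seed weight for a new association are all assumptions made for illustration; the text specifies only the 200-entry ring buffer and the multiplicative update. The weakening rule is not given, so the sketch leaves it out.

```python
from collections import deque
from typing import Dict, List, Tuple

LOG_CAPACITY = 200    # ring buffer size stated in the text
BOOST_FACTOR = 0.1    # assumed value; the text does not give one
INITIAL_WEIGHT = 0.1  # assumed seed weight for a brand-new association


def pair_key(a: str, b: str) -> Tuple[str, str]:
    """Order-independent key, so (a, b) and (b, a) refer to one association."""
    return (a, b) if a <= b else (b, a)


class HebbianSketch:
    def __init__(self) -> None:
        # deque(maxlen=N) discards the oldest entry once full: a ring buffer.
        self.log = deque(maxlen=LOG_CAPACITY)
        self.weights: Dict[Tuple[str, str], float] = {}

    def record_retrieval(self, engram_ids: List[str]) -> None:
        """Log every unordered pair of engrams returned by one query."""
        ids = sorted(set(engram_ids))
        for i, a in enumerate(ids):
            for b in ids[i + 1:]:
                self.log.append((a, b))

    def run_worker(self) -> None:
        """Drain the log, applying new_weight = old_weight * (1 + boost_factor)."""
        while self.log:
            a, b = self.log.popleft()
            key = pair_key(a, b)
            old = self.weights.get(key, INITIAL_WEIGHT)
            self.weights[key] = old * (1 + BOOST_FACTOR)
```

Because the update is multiplicative, a new association needs a non-zero starting weight, which is why the sketch seeds one. Keying each pair in sorted order keeps a single weight per association regardless of retrieval order.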

Semantic Triggers

The database pushes — you don't have to pull.

Subscribe to a semantic context, and MuninnDB will push a notification to your agent the moment a matching memory becomes highly relevant. No polling. No scanning. The database watches for relevance changes and delivers results to you — like an alert system for knowledge.

Why it matters: Your agent gets critical context at the right moment — not when it happens to query. Proactive intelligence instead of reactive polling.

Triggers are evaluated against active engrams after each decay or Hebbian cycle. Semantic matching uses embedding cosine similarity or BM25 full-text search (FTS). Notifications are pushed over WebSocket, SSE, or a callback, and are rate-limited per vault.
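To make the cosine-similarity path concrete, here is a small sketch of a trigger check using a callback. The `Trigger` class, both thresholds, and the callback signature are hypothetical; the text does not describe MuninnDB's subscription API. BM25 matching, transport, and rate limiting are omitted.

```python
import math
from typing import Callable, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


class Trigger:
    """A subscription: a context embedding, two thresholds, and a callback."""

    def __init__(
        self,
        context_vec: Sequence[float],
        callback: Callable[[str, float], None],
        min_similarity: float = 0.8,  # assumed threshold
        min_relevance: float = 0.5,   # assumed threshold
    ) -> None:
        self.context_vec = list(context_vec)
        self.callback = callback
        self.min_similarity = min_similarity
        self.min_relevance = min_relevance

    def evaluate(self, engram_id: str, engram_vec: Sequence[float], relevance: float) -> bool:
        """Fire the callback if the engram matches the context and is relevant enough."""
        similarity = cosine_similarity(self.context_vec, engram_vec)
        if similarity >= self.min_similarity and relevance >= self.min_relevance:
            self.callback(engram_id, similarity)
            return True
        return False
```

In this sketch, a worker would call `evaluate` for each active engram once a decay or Hebbian cycle has updated its relevance score, so the subscriber never has to poll.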

The ACTIVATE pipeline — 6 phases in <20ms

When you call ACTIVATE with a context string, MuninnDB runs a parallel 6-phase pipeline to find the most cognitively relevant engrams.

Embed + Tokenize

Convert your context string to embeddings and BM25 tokens simultaneously.

Parallel Retrieval

Three goroutines run concurrently, collecting FTS candidates (BM25), vector candidates (HNSW cosine), and a decay-filtered pool.

RRF Fusion

Reciprocal Rank Fusion merges the three result lists into one coherent ranking.
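As an illustration of the fusion step, the sketch below implements standard Reciprocal Rank Fusion, where each list contributes 1 / (k + rank) to an item's score. The constant k = 60 is the value commonly used in the literature and is an assumption here, as are the function name and the sample engram IDs.

```python
from collections import defaultdict
from typing import Dict, List, Sequence

RRF_K = 60  # conventional RRF constant; assumed, not taken from MuninnDB


def rrf_fuse(rankings: Sequence[Sequence[str]], k: int = RRF_K) -> List[str]:
    """Merge ranked lists: each list adds 1 / (k + rank) to an item's score."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, engram_id in enumerate(ranking, start=1):
            scores[engram_id] += 1.0 / (k + rank)
    # Highest fused score first; ties break on ID for a deterministic order.
    return sorted(scores, key=lambda eid: (-scores[eid], eid))


# Hypothetical candidate lists from the three retrieval paths.
fts_hits = ["a", "b", "c"]
vector_hits = ["b", "a", "d"]
decay_pool = ["b", "c"]
print(rrf_fuse([fts_hits, vector_hits, decay_pool]))
```

RRF uses only rank positions, so BM25 scores and cosine similarities never need to be put on a common scale. An engram that appears near the top of several lists, such as "b" here, rises above one that ranks first in a single list.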

Hebbian Boost

Co-activation weights increase scores for engrams frequently retrieved together.

Graph Traversal

BFS graph walk (depth 2) surfaces associated engrams with hop penalty scoring.
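One way to picture the depth-2 walk is the sketch below. The adjacency-list graph, the multiplicative hop penalty of 0.5, and the function name are assumptions for illustration; the text states only that the walk is breadth-first, limited to depth 2, and penalises hops.

```python
from collections import deque
from typing import Dict, List

MAX_DEPTH = 2      # traversal depth stated in the text
HOP_PENALTY = 0.5  # assumed multiplier applied per hop


def expand(
    graph: Dict[str, List[str]],
    seeds: Dict[str, float],
    max_depth: int = MAX_DEPTH,
    hop_penalty: float = HOP_PENALTY,
) -> Dict[str, float]:
    """Return seed scores plus penalised scores for engrams within max_depth hops."""
    scores = dict(seeds)
    visited = set(seeds)
    queue = deque((node, score, 0) for node, score in seeds.items())
    while queue:
        node, score, depth = queue.popleft()
        if depth == max_depth:
            continue  # do not walk past the depth limit
        for neighbour in graph.get(node, []):
            if neighbour in visited:
                continue  # keep the score from the first (shortest) path found
            visited.add(neighbour)
            hop_score = score * hop_penalty
            scores[neighbour] = hop_score
            queue.append((neighbour, hop_score, depth + 1))
    return scores
```

Starting from a seed with score 1.0, a direct neighbour receives 0.5 and a neighbour's neighbour receives 0.25, while anything three hops away is never reached.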

Score + Why

Computes the final composite score, runs the Why builder (which explains each result), and streams the response.

System layers

Start in 5 minutes.

No ops. No dependencies.

Download a single binary, run it, and your AI agent has a cognitive memory system. When you're ready to scale, it scales with you.

Apache 2.0 · Open Source · No account required