Sub-20ms cognitive queries. Single binary. Zero dependencies. Memory that decays, learns, and notifies — automatically.

```go
// Store a memory: it decays, learns, and triggers automatically
mem := muninn.NewMemory("api-key", "muninn://localhost:8747")

engram, _ := mem.Store(ctx, &muninn.StoreRequest{
	Concept: "user prefers dark mode",
	Content: "Always use dark theme in UI responses",
	Tags:    []string{"preference", "ui"},
})

// Activate: finds relevant memories in <20ms
results, _ := mem.Activate(ctx, "what does the user want?", 5)
```

```python
# Store a memory: it decays, learns, and triggers automatically
import muninn

mem = muninn.Memory("api-key", "muninn://localhost:8747")

engram = mem.store(
    concept="user prefers dark mode",
    content="Always use dark theme in UI responses",
    tags=["preference", "ui"],
)

# Activate: finds relevant memories in <20ms
results = mem.activate("what does the user want?", limit=5)
```

The world has built AI models that think in real time, then stored their memories in databases designed for invoices. The mismatch is costing you.

Every AI team stitches memory together from Redis, Postgres, and a vector store. None of these were built for memory — they're storage systems wearing a costume.

A fact from six months ago sits in your DB with the same weight as something from yesterday. Storage systems have no concept of relevance over time. Your AI agent's context gets cluttered.

Traditional databases are passive. You pull data when you ask; they never push it when something becomes relevant. Your AI agent is flying blind between queries.

"Memory isn't storage.

It's a living system."

Your brain doesn't store memories like a hard drive. They strengthen when recalled, fade when ignored, connect to related ideas automatically, and surface unbidden when suddenly relevant. MuninnDB brings these same properties to your database — not as features, but as the foundation.

Named after Muninn, Odin's raven of memory in Norse mythology.

These aren't features bolted on top — they're the storage engine itself. The math runs on every read and write.

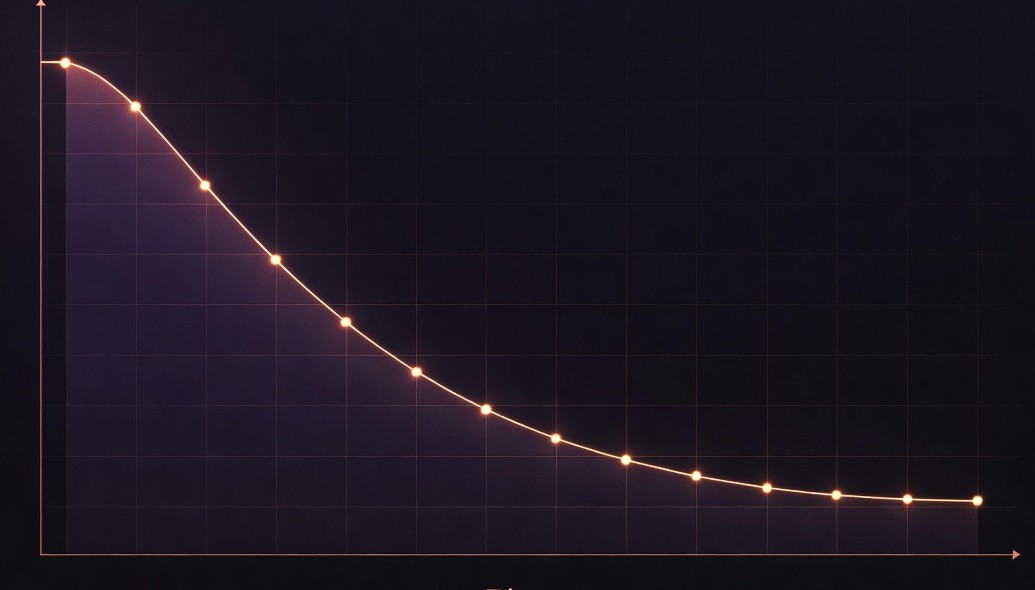

Memories fade — just like real ones.

Every engram (memory) has a relevance score that naturally fades over time using the Ebbinghaus forgetting curve — the same math that describes how human memory works. Old, unused memories drift toward a background floor. Fresh, frequently-accessed memories stay strong.

Why it matters: Your agent's context stays fresh. Stale information fades naturally — no manual cleanup required.

Continuous decay worker runs Ebbinghaus formula: R(t) = max(floor, e^(−t/S)) where S = stability grown through spaced retrieval. Default decay floor 0.05 — memories never fully vanish.
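The decay formula above can be sketched in a few lines of Python. This is an illustration of the math only, not MuninnDB's internal code: the function names, the time unit, and the stability growth factor are assumptions made for the example. Only the formula and the 0.05 floor come from the description above.

```python
import math

DECAY_FLOOR = 0.05  # default decay floor quoted above


def retention(elapsed: float, stability: float, floor: float = DECAY_FLOOR) -> float:
    """R(t) = max(floor, e^(-t/S)); elapsed and stability share one time unit."""
    if stability <= 0:
        raise ValueError("stability must be positive")
    return max(floor, math.exp(-elapsed / stability))


def grow_stability(stability: float, growth: float = 1.5) -> float:
    """Hypothetical rule: each retrieval multiplies S by a fixed growth factor."""
    return stability * growth


if __name__ == "__main__":
    s = 1.0
    for t in (0, 1, 2, 5, 10):
        print(f"t={t:>2}  S={s:.2f}  R={retention(t, s):.3f}")
    # A retrieval raises stability, so the same elapsed time decays less.
    s = grow_stability(s)
    print(f"after one retrieval: S={s:.2f}  R(2)={retention(2, s):.3f}")
```

With a larger S the curve flattens, which is how frequently recalled memories stay strong while unused ones settle at the floor.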

Related memories grow stronger together.

When two memories are retrieved together — because both were relevant to your query — their association automatically strengthens. The more often two engrams fire together, the stronger their bond. This is "neurons that fire together, wire together" — Hebbian learning — without any LLM involved.

Why it matters: Your agent learns what concepts belong together without you telling it. Context awareness emerges automatically from usage patterns.

Co-activation log (ring buffer, 200 entries) feeds Hebbian worker. Weight update: new_weight = old_weight × (1 + boost_factor). Bidirectional — unused associations weaken symmetrically.
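A minimal sketch of the update rule above, assuming a Python model of the worker. The class name, the `initial_weight` seed, and the 0.1 boost are hypothetical; only the 200-entry ring buffer and the multiplicative update come from the description. A multiplicative rule needs a non-zero starting weight, so the sketch seeds new pairs with one. The weakening of unused associations is left out because no formula is given for it.

```python
from collections import deque
from itertools import combinations

LOG_CAPACITY = 200  # ring buffer size quoted above


class HebbianSketch:
    """Toy model: a bounded co-activation log feeding a weight updater."""

    def __init__(self, boost_factor=0.1, initial_weight=0.1, capacity=LOG_CAPACITY):
        self.boost_factor = boost_factor      # assumed value
        self.initial_weight = initial_weight  # assumed seed for unseen pairs
        self.log = deque(maxlen=capacity)     # oldest entries drop off when full
        self.weights = {}                     # (id_a, id_b) -> association weight

    def record_activation(self, engram_ids):
        """Log the set of engrams returned together by one activation."""
        self.log.append(tuple(engram_ids))

    def apply_log(self):
        """Drain the log and strengthen every co-activated pair."""
        while self.log:
            ids = self.log.popleft()
            # Sorted pairs give one undirected edge per pair of engrams.
            for a, b in combinations(sorted(set(ids)), 2):
                old = self.weights.get((a, b), self.initial_weight)
                # new_weight = old_weight * (1 + boost_factor)
                self.weights[(a, b)] = old * (1 + self.boost_factor)


if __name__ == "__main__":
    h = HebbianSketch()
    h.record_activation(["dark-mode", "ui-theme"])
    h.record_activation(["ui-theme", "dark-mode", "font-size"])
    h.apply_log()
    for pair, weight in sorted(h.weights.items()):
        print(pair, round(weight, 4))
```

Pairs that keep surfacing together compound their weight on every cycle, while the bounded log keeps the worker's input size fixed.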

The database pushes — you don't have to pull.

Subscribe to a semantic context, and MuninnDB will push a notification to your agent the moment a matching memory becomes highly relevant. No polling. No scanning. The database watches for relevance changes and delivers results to you — like an alert system for knowledge.

Why it matters: Your agent gets critical context at the right moment — not when it happens to query. Proactive intelligence instead of reactive polling.

Triggers evaluated against active engrams after decay/Hebbian cycles. Semantic matching via embedding cosine similarity or BM25 FTS. Push via WebSocket, SSE, or callback. Rate-limited per vault.
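The embedding path of trigger evaluation can be sketched as a cosine similarity check combined with a relevance check. This is a sketch under stated assumptions: `should_fire` and both thresholds are hypothetical, and the BM25 path, delivery over WebSocket or SSE, and per-vault rate limiting are not shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def should_fire(subscription_vec, engram_vec, relevance,
                min_similarity=0.8, min_relevance=0.5):
    """Fire only when the engram is relevant enough AND matches the subscription.

    Both thresholds are assumed values chosen for illustration.
    """
    if relevance < min_relevance:
        return False
    return cosine_similarity(subscription_vec, engram_vec) >= min_similarity


if __name__ == "__main__":
    subscription = [1.0, 0.0]
    print(should_fire(subscription, [1.0, 0.1], relevance=0.9))  # close match, relevant
    print(should_fire(subscription, [0.0, 1.0], relevance=0.9))  # unrelated topic
    print(should_fire(subscription, [1.0, 0.0], relevance=0.1))  # match, but decayed
```

Checking relevance first means a decayed engram never triggers a push, however closely it matches the subscription.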

Apache 2.0. Core engine is free and open forever. No cloud lock-in. github.com/scrypster/muninndb

Native MCP integration. Works with Claude, Cursor, and any MCP-compatible agent out of the box.

Single binary. No Docker, no Redis, no external services. Download, run, use.

These aren't missing features. These capabilities didn't exist in a database before MuninnDB, because earlier databases were never designed for cognitive memory.

| Capability | PostgreSQL | Redis | Pinecone | Neo4j | MuninnDB |
|---|---|---|---|---|---|
| Memory decay (Ebbinghaus) | ✗ | ✗ | ✗ | ✗ | ✓ |
| Hebbian auto-learning | ✗ | ✗ | ✗ | ✗ | ✓ |
| Semantic push triggers | ✗ | ✗ | ✗ | ✗ | ✓ |
| Bayesian confidence | ✗ | ✗ | ✗ | ✗ | ✓ |
| Full-text search (BM25) | ✓ | ✗ | ✗ | ✗ | ✓ |
| Vector / semantic search | ✗ | ✗ | ✓ | ✗ | ✓ |
| Graph traversal | ✗ | ✗ | ✗ | ✓ | ✓ |
| Zero external dependencies | ✗ | ✗ | ✗ | ✗ | ✓ |
| Single binary deploy | ✗ | ✓ | ✗ | ✗ | ✓ |

Comparisons reflect core native capabilities. The PostgreSQL pgvector extension provides limited vector support but no cognitive primitives.

Cognitive doesn't mean slow. MuninnDB runs its full 6-phase activation pipeline — embedding, BM25, vector search, RRF fusion, Hebbian boost, and graph traversal — in under 20ms.
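The RRF fusion phase merges the BM25 and vector result lists into a single ranking. A minimal sketch of reciprocal rank fusion follows. The function name and the constant `k = 60` are assumptions: 60 is the value commonly used in the literature, not a confirmed MuninnDB setting.

```python
def rrf_fuse(rankings, k=60):
    """Reciprocal rank fusion: score(d) = sum over lists of 1 / (k + rank of d).

    `rankings` is a list of ranked ID lists, best first. Items missing from
    a list contribute nothing for that list.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a stable order.
    return sorted(scores, key=lambda d: (-scores[d], d))


if __name__ == "__main__":
    bm25_hits = ["a", "b", "c"]    # keyword ranking
    vector_hits = ["b", "c", "d"]  # embedding ranking
    print(rrf_fuse([bm25_hits, vector_hits]))
```

Because RRF uses only ranks, it combines BM25 scores and cosine similarities without having to normalize two incompatible score scales.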

| Tier | Engrams | Disk | Deployment |
|---|---|---|---|
| Personal | 10K | 17–40 MB | Single binary |
| Power User | 100K | 170–400 MB | Single binary |
| Team | 1M | 1.7–4 GB | Single node |
| Enterprise | 100M+ | 170–400 GB | Sharded cluster |
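Every tier in the table works out to roughly 1.7–4 KB of disk per engram (17 MB / 10K at the low end, 40 MB / 10K at the high end). A rough sizing helper, assuming that ratio holds linearly between tiers and that the table uses decimal units, might look like this. The function name is hypothetical.

```python
BYTES_PER_ENGRAM_LOW = 1_700   # derived from the table: 17 MB / 10K engrams
BYTES_PER_ENGRAM_HIGH = 4_000  # derived from the table: 40 MB / 10K engrams


def estimate_disk_bytes(engrams: int) -> tuple[int, int]:
    """Return a (low, high) disk estimate in bytes, assuming linear scaling."""
    if engrams < 0:
        raise ValueError("engram count cannot be negative")
    return engrams * BYTES_PER_ENGRAM_LOW, engrams * BYTES_PER_ENGRAM_HIGH


if __name__ == "__main__":
    for count in (10_000, 250_000, 1_000_000):
        low, high = estimate_disk_bytes(count)
        print(f"{count:>9,} engrams: {low / 1e6:,.0f}-{high / 1e6:,.0f} MB")
```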

No Docker required. No cloud accounts. No dependencies to install. Just download and run.

```shell
# macOS / Linux
curl -sSL https://get.muninndb.com | sh

# Or build from source
git clone https://github.com/scrypster/muninndb
cd muninndb && go build ./cmd/muninndb
```

```shell
# Start the server (port 8747 MBP, 8749 REST, 8750 UI)
muninndb serve

# Open the web UI
open http://localhost:8750
```

```go
import "github.com/scrypster/muninndb/sdk/go/muninn"

mem := muninn.NewMemory("your-api-key", "muninn://localhost:8747")

// Store a memory
mem.Store(ctx, &muninn.StoreRequest{
	Concept: "user prefers concise replies",
	Content: "Keep responses under 200 words when possible",
	Tags:    []string{"preference", "style"},
})

// Activate relevant memories
results, _ := mem.Activate(ctx, "how should I respond?", 5)
```

Every AI application eventually needs persistent memory. The question is whether you want to manage it manually or let the database handle the cognitive work.

Stop stitching together Redis, Postgres, and a vector store. MuninnDB gives your agent one endpoint that stores, recalls, decays, and learns — automatically. Your agent remembers what it needs, forgets what it doesn't.

Every activation includes a "Why" score showing exactly which memories surfaced and why. Confidence is tracked. Contradictions are detected. You can edit, correct, or archive any memory at any time. Compliance-ready from day one.

Every cognitive primitive is exposed as plain math. No black boxes. Watch Hebbian weights evolve. Observe decay curves. Subscribe to confidence updates. MuninnDB is a laboratory for studying how artificial cognitive memory behaves at scale.

Download a single binary, run it, and your AI agent has a cognitive memory system. When you're ready to scale, it scales with you.

Apache 2.0 · Open Source · No account required